Word Representation Methods using Deep Learning

Distributed Representation 1: Word Embedding

Distributed Representations of Words and Phrases and their Compositionality

Before moving on to distributed representations of words, we first need to understand sparse representations.

Understanding Sparse Representations

Examples:

- One-hot encoding

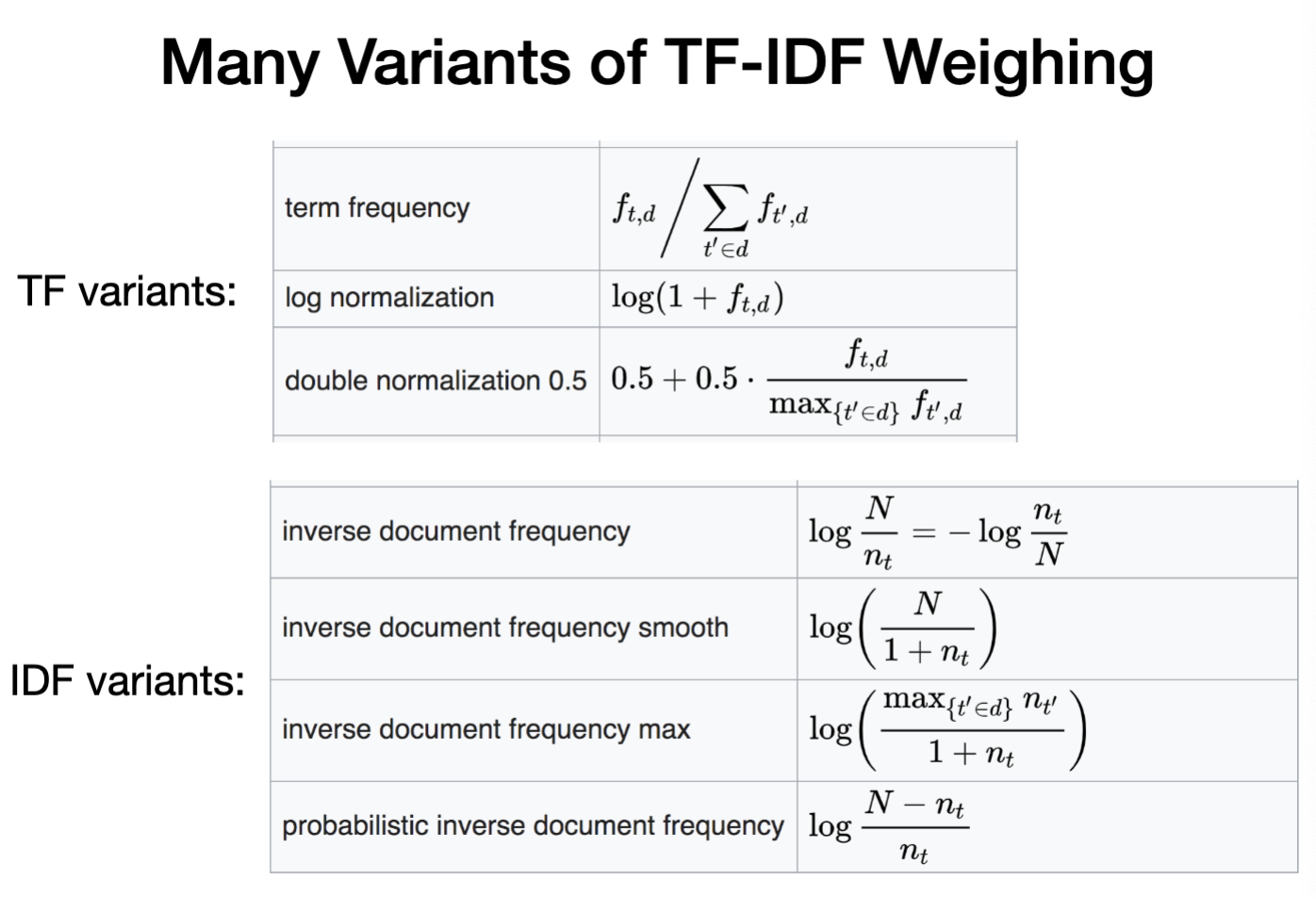

- Bag of words: ignores word order; each document is represented as a length-N vector, where N is the size of the vocabulary. The document vectors can be stored as a binary matrix, a count matrix, or a TF-IDF matrix. The idea extends from a bag of words to a bag of n-grams.

Drawbacks:

- they do not capture semantic correlations between words

- the vectors are sparse and high-dimensional
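The two sparse representations and their drawbacks can be seen on a toy corpus. This is a minimal sketch in plain Python; the two example sentences and the helper names `one_hot`, `bag_of_words` and `dot` are made up for illustration.

```python
from collections import Counter

# Toy corpus (hypothetical example sentences).
docs = ["the cat sat on the mat", "the dog sat"]

# Vocabulary of size N; every vector below has length N.
vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}


def one_hot(word):
    """Length-N vector with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[index[word]] = 1
    return vec


def bag_of_words(doc, binary=False):
    """Count (or binary) vector for a document; word order is discarded."""
    counts = Counter(doc.split())
    return [min(counts[w], 1) if binary else counts[w] for w in vocab]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


print(vocab)                               # ['cat', 'dog', 'mat', 'on', 'sat', 'the']
print(bag_of_words(docs[0]))               # [1, 0, 1, 1, 1, 2]
print(bag_of_words(docs[0], binary=True))  # [1, 0, 1, 1, 1, 1]

# Any two distinct one-hot vectors are orthogonal, so "cat" and "dog"
# look exactly as unrelated as "cat" and "on".
print(dot(one_hot("cat"), one_hot("dog")))  # 0
```

With a realistic vocabulary N is in the tens of thousands or more, and almost every entry of each vector is zero.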

Source: DLT lecture notes by Chao Zhang, Georgia Tech

Understanding Dimension Reduction and Topic Modeling

- Latent Semantic Analysis

- Uses SVD to map the data into a low-dimensional representation by keeping only the top k topics (the k largest singular values)

- Source: LSA Youtube
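As a rough illustration of the SVD step, the sketch below factorises a small term-document count matrix with NumPy and keeps the top k = 2 components. The matrix, the term list and the `cosine` helper are invented for this example, and scaling the term vectors by the singular values is one common convention, not the only one.

```python
import numpy as np

# Toy term-document count matrix: rows are terms, columns are documents.
# Documents 0-1 are about pets, documents 2-3 about finance (made-up data).
terms = ["cat", "dog", "pet", "stock", "market"]
X = np.array([
    [2, 1, 0, 0],
    [1, 2, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 2, 1],
    [0, 0, 1, 2],
], dtype=float)

k = 2  # number of latent topics to keep

# Thin SVD: X = U @ diag(s) @ Vt, singular values sorted in descending order.
U, s, Vt = np.linalg.svd(X, full_matrices=False)

term_vecs = U[:, :k] * s[:k]    # each term as a k-dimensional vector
doc_vecs = Vt[:k, :].T * s[:k]  # each document as a k-dimensional vector


def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


cat, dog, stock = (term_vecs[terms.index(t)] for t in ("cat", "dog", "stock"))
print(term_vecs.shape)     # (5, 2)
print(cosine(cat, dog))    # close to 1: same latent topic
print(cosine(cat, stock))  # close to 0: different latent topics
```

Unlike the one-hot vectors, terms that occur in similar documents end up close together in the reduced space.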

Understanding word embedding

- word2vec: a local context window method that learns from co-occurrence statistics

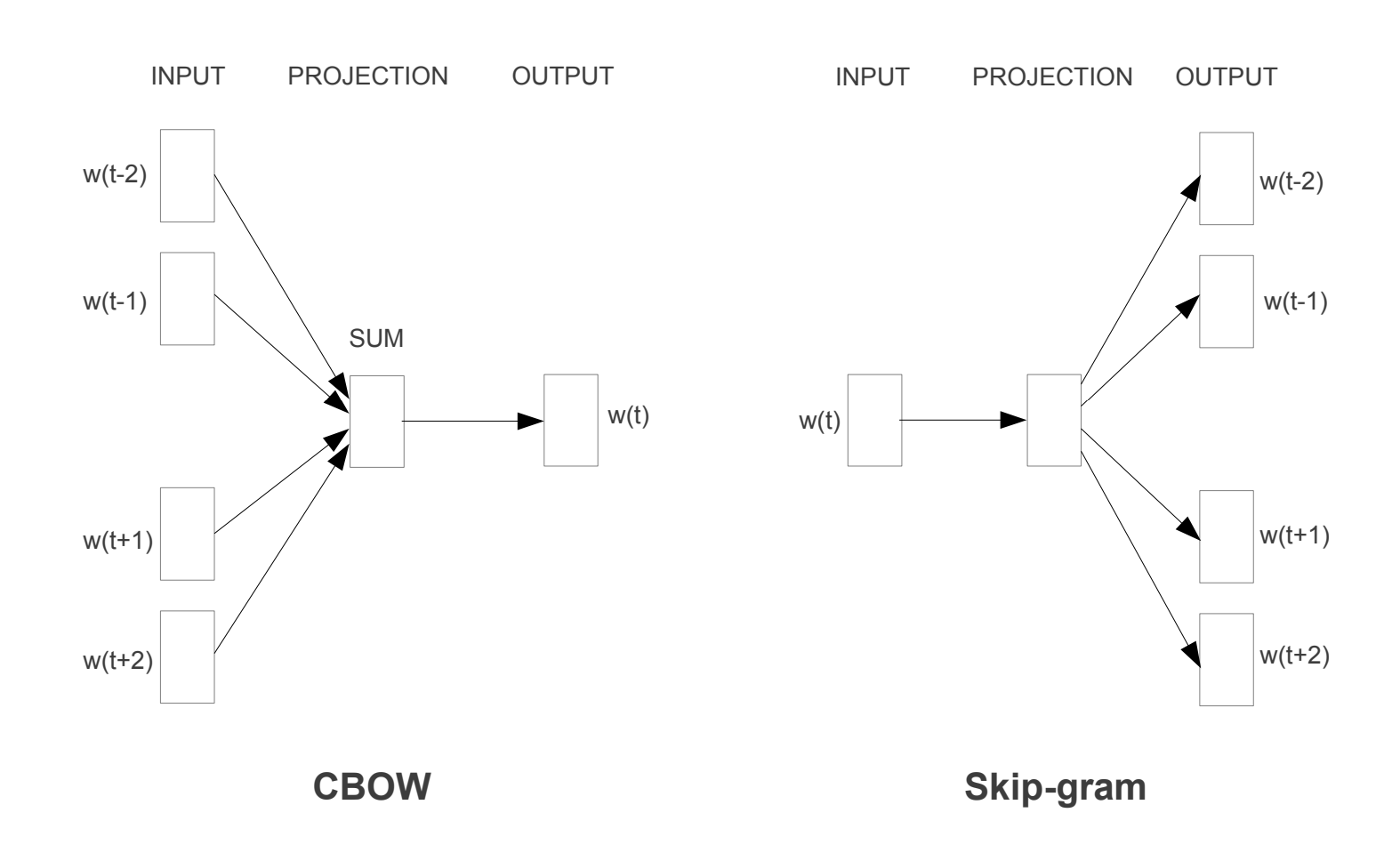

- CBOW: use the words in a context window to predict the center word

- Skip-gram: use the center word to predict the surrounding words

- A two-layer neural network with two weight matrices. Each training step feeds in one center word, and each center word goes through k forward passes, one for each of its k context words.

- The hidden layer does not use an activation function; its output is passed directly to the output layer. The output layer applies a softmax to produce a probability distribution over the vocabulary, and the prediction is compared with the one-hot encoding of the target (output) word.

- Objective: find word representations that are useful for predicting the surrounding words.
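The skip-gram steps listed above can be sketched in plain Python. This is an illustration under simplifying assumptions, not the reference word2vec implementation: the sentence, the embedding size `D = 3`, the random initialisation and the function names are all hypothetical, and no training loop is shown.

```python
import math
import random


def skipgram_pairs(tokens, window=2):
    """All (center, context) training pairs within the given window."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


tokens = "the quick brown fox jumps".split()
vocab = sorted(set(tokens))
idx = {w: i for i, w in enumerate(vocab)}
V, D = len(vocab), 3  # vocabulary size, embedding size

random.seed(0)
# Two weight matrices: input (V x D) and output (D x V).
W_in = [[random.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(V)]
W_out = [[random.uniform(-0.5, 0.5) for _ in range(V)] for _ in range(D)]


def forward(center):
    """Softmax distribution over the vocabulary for one center word."""
    # Multiplying a one-hot vector by W_in is a row lookup; the hidden
    # layer applies no activation function.
    h = W_in[idx[center]]
    scores = [sum(h[d] * W_out[d][j] for d in range(D)) for j in range(V)]
    return softmax(scores)


def loss(center, context):
    """Cross-entropy against the one-hot encoding of the context word."""
    return -math.log(forward(center)[idx[context]])


pairs = skipgram_pairs(tokens, window=1)
print(pairs[:3])  # [('the', 'quick'), ('quick', 'the'), ('quick', 'brown')]
print(sum(loss(c, o) for c, o in pairs) / len(pairs))  # average loss before training
```

After training, the rows of `W_in` are the word embeddings. The softmax over all V words in `forward` is the expensive part for a real vocabulary.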

source: skip-gram, skip-gram youtube, paper link

About this Paper

This paper mainly discusses extensions of the Skip-gram model. The first is hierarchical softmax, which reduces the computational cost of the full softmax. The second is negative sampling, a simplified form of noise-contrastive estimation that serves as an alternative to hierarchical softmax. The third is subsampling of frequent words such as “a” and “the”.
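Two of these extensions are small enough to sketch directly. The code below is a hedged illustration, not the paper's implementation: it uses the discard probability 1 - sqrt(t / f(w)) for subsampling, a unigram distribution raised to the 3/4 power for drawing negatives, and the negative-sampling loss for one (center, context) pair. The threshold `t = 1e-5`, the toy counts and all function names are assumptions made for this example.

```python
import math


def discard_prob(freq, t=1e-5):
    """Probability of dropping a word with relative corpus frequency `freq`."""
    return max(0.0, 1.0 - math.sqrt(t / freq))


def noise_distribution(counts, power=0.75):
    """Unigram distribution raised to `power`, used to draw negative samples."""
    weights = {w: c ** power for w, c in counts.items()}
    total = sum(weights.values())
    return {w: x / total for w, x in weights.items()}


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def negative_sampling_loss(v_center, u_context, u_negatives):
    """-log s(u_o . v_c) - sum_k log s(-u_k . v_c), with s the sigmoid."""
    loss = -math.log(sigmoid(dot(u_context, v_center)))
    for u_neg in u_negatives:
        loss -= math.log(sigmoid(-dot(u_neg, v_center)))
    return loss


# Very frequent words are dropped most of the time; rare words never are.
print(discard_prob(0.05))  # about 0.986
print(discard_prob(1e-6))  # 0.0

# Raising counts to the 3/4 power shifts probability mass toward rarer words.
noise = noise_distribution({"the": 1000, "cat": 10})
print(noise["cat"] > 10 / 1010)  # True
```

Each training pair now touches only the true context word and a handful of sampled negatives, so the cost no longer grows with the vocabulary size.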

source: word embedding glove

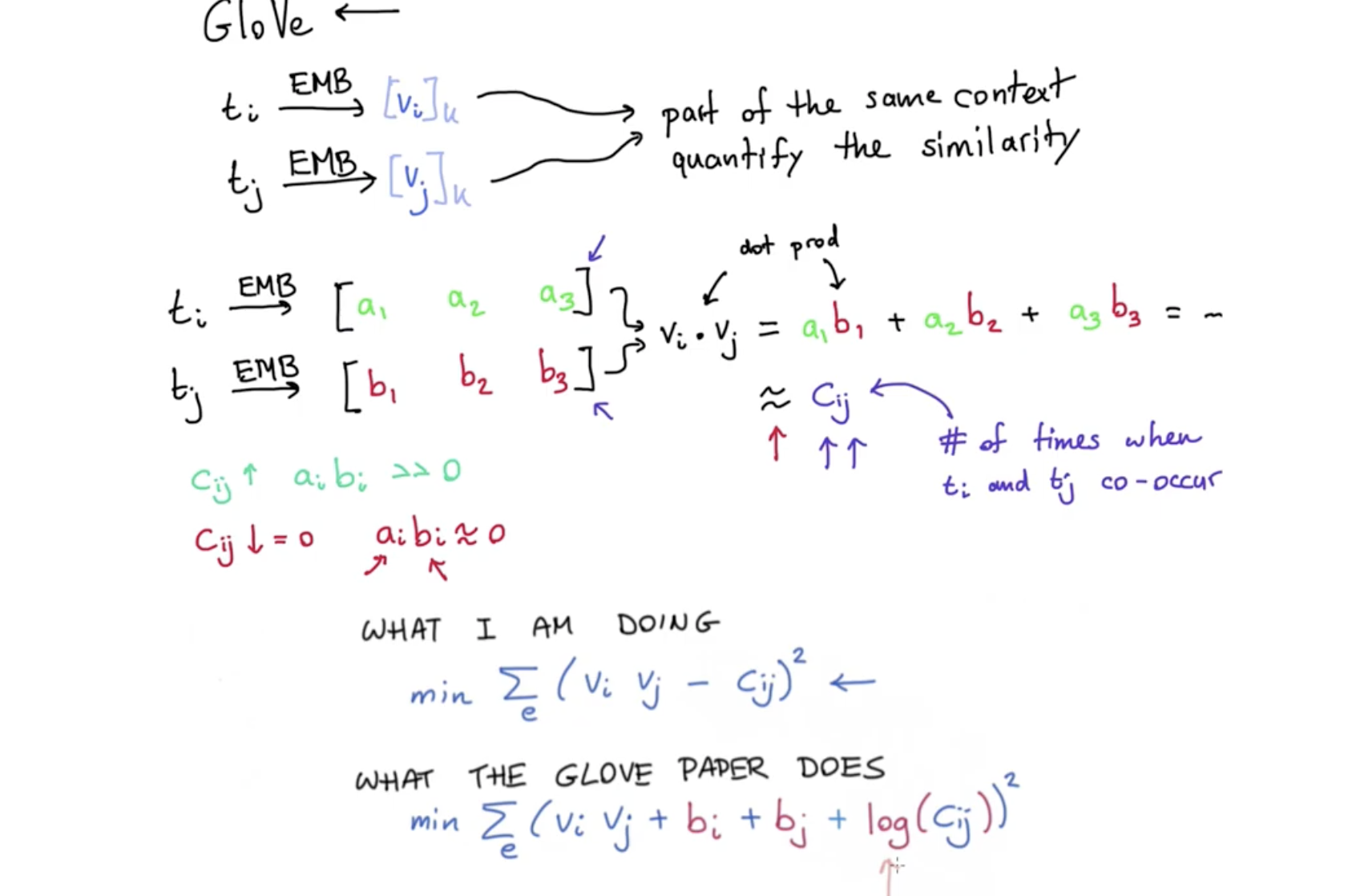

GloVe: Global Vectors for Word Representation

First this paper discussed the drawbacks of LSA and local context window methods:

- LSA: performs poorly on the word analogy task

- Local context window methods: make poor use of corpus statistics (such as global co-occurrence counts)

- It then introduces GloVe, which trains word vectors on global word-word co-occurrence counts:
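To make the idea concrete, here is a minimal sketch of the two ingredients GloVe combines: global co-occurrence counts collected with a context window, and a weighted least-squares loss on the log counts. It is an illustration only; the toy token list and the function names are invented, the defaults `x_max = 100` and `alpha = 0.75` are the values commonly quoted for GloVe, and no optimiser is shown.

```python
import math
from collections import defaultdict


def cooccurrence(tokens, window=2):
    """Symmetric co-occurrence counts; a pair d words apart contributes 1/d."""
    X = defaultdict(float)
    for i, w in enumerate(tokens):
        for j in range(max(0, i - window), i):
            d = i - j
            X[(w, tokens[j])] += 1.0 / d
            X[(tokens[j], w)] += 1.0 / d
    return X


def weight(x, x_max=100.0, alpha=0.75):
    """Weighting function f(x): damps rare pairs and caps frequent ones at 1."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def pair_loss(w_i, w_j, b_i, b_j, x_ij):
    """One term of the objective: f(X_ij) * (w_i . w_j + b_i + b_j - log X_ij)^2."""
    err = dot(w_i, w_j) + b_i + b_j - math.log(x_ij)
    return weight(x_ij) * err ** 2


X = cooccurrence("a b a".split(), window=2)
print(X[("a", "b")])  # 2.0
print(weight(1.0) < weight(50.0) < weight(100.0) == 1.0)  # True
```

The full objective sums `pair_loss` over all pairs with a nonzero count, so training works from corpus-wide statistics and does not need to revisit each local window.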

source: glove youtube, glove medium, glove csdn

Distributed Representation 2: Deep Contextual Representation

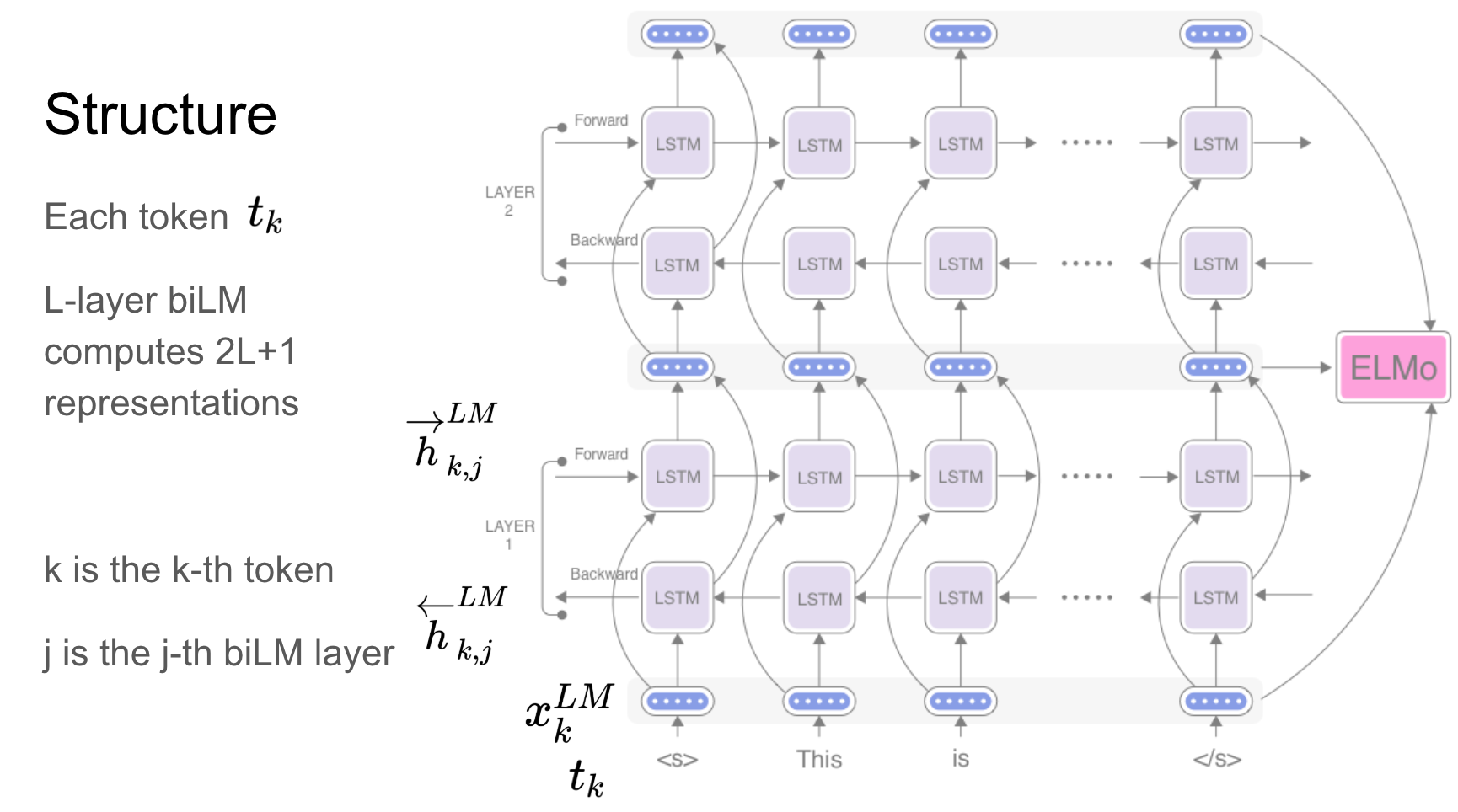

Deep contextualized word representations (ELMo)

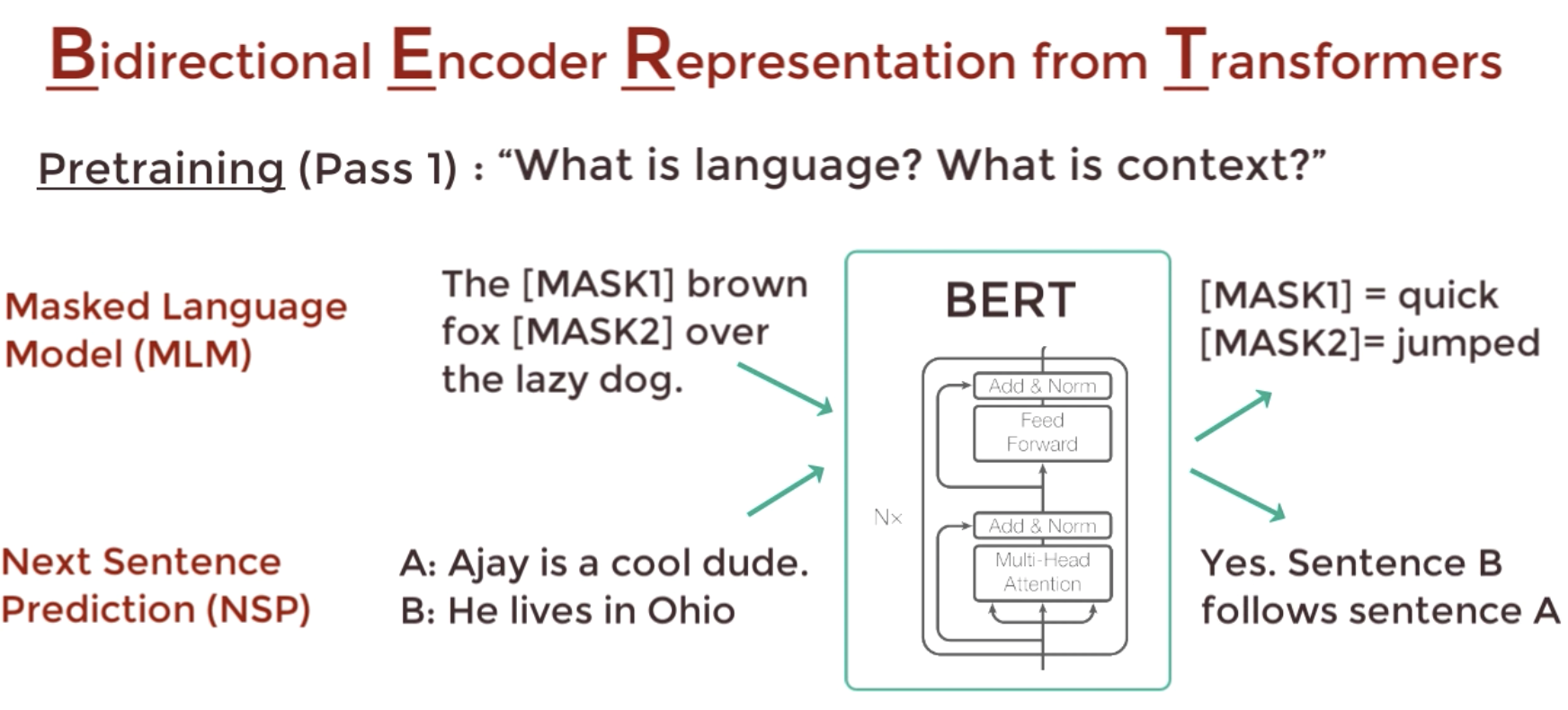

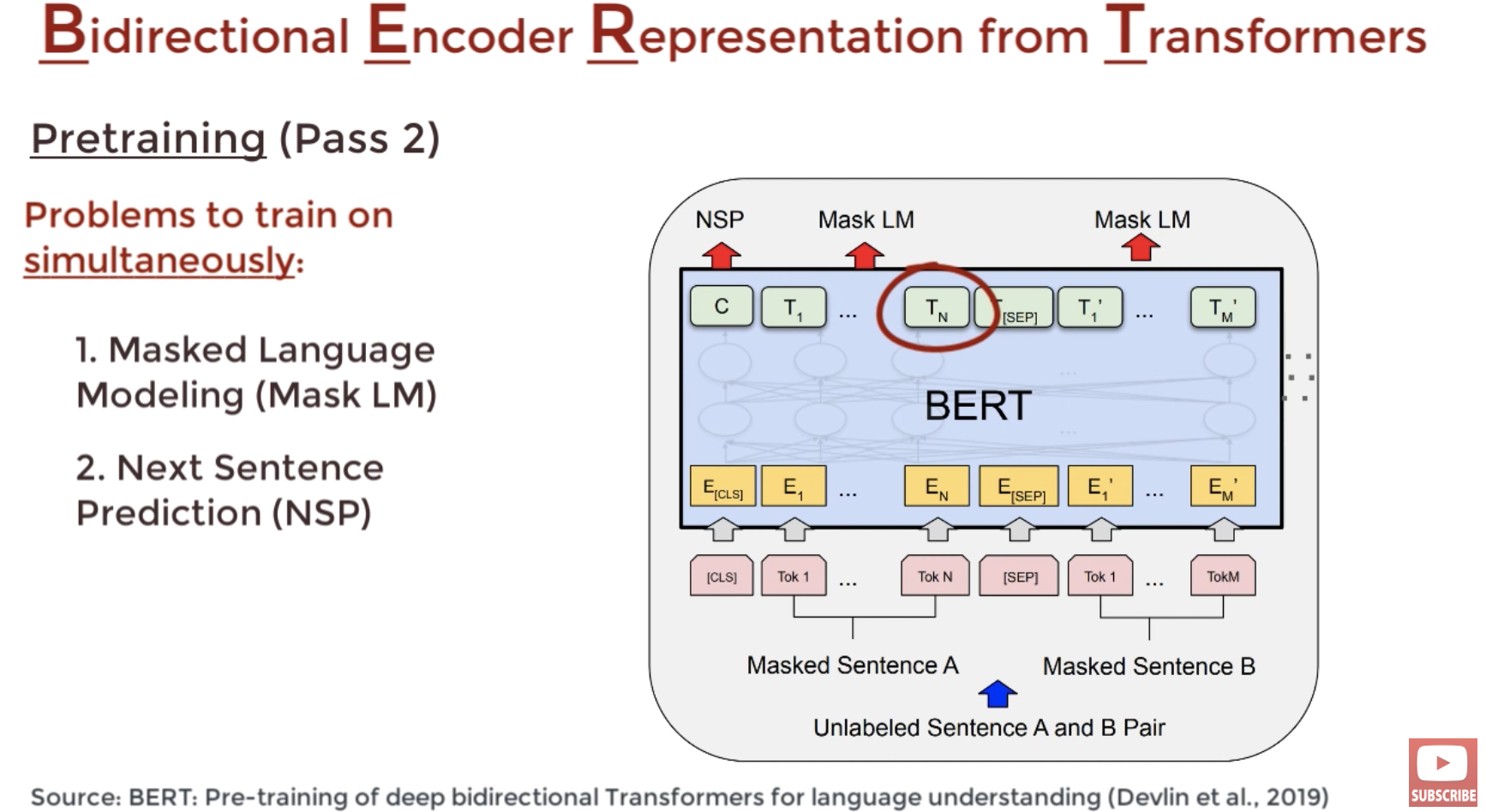

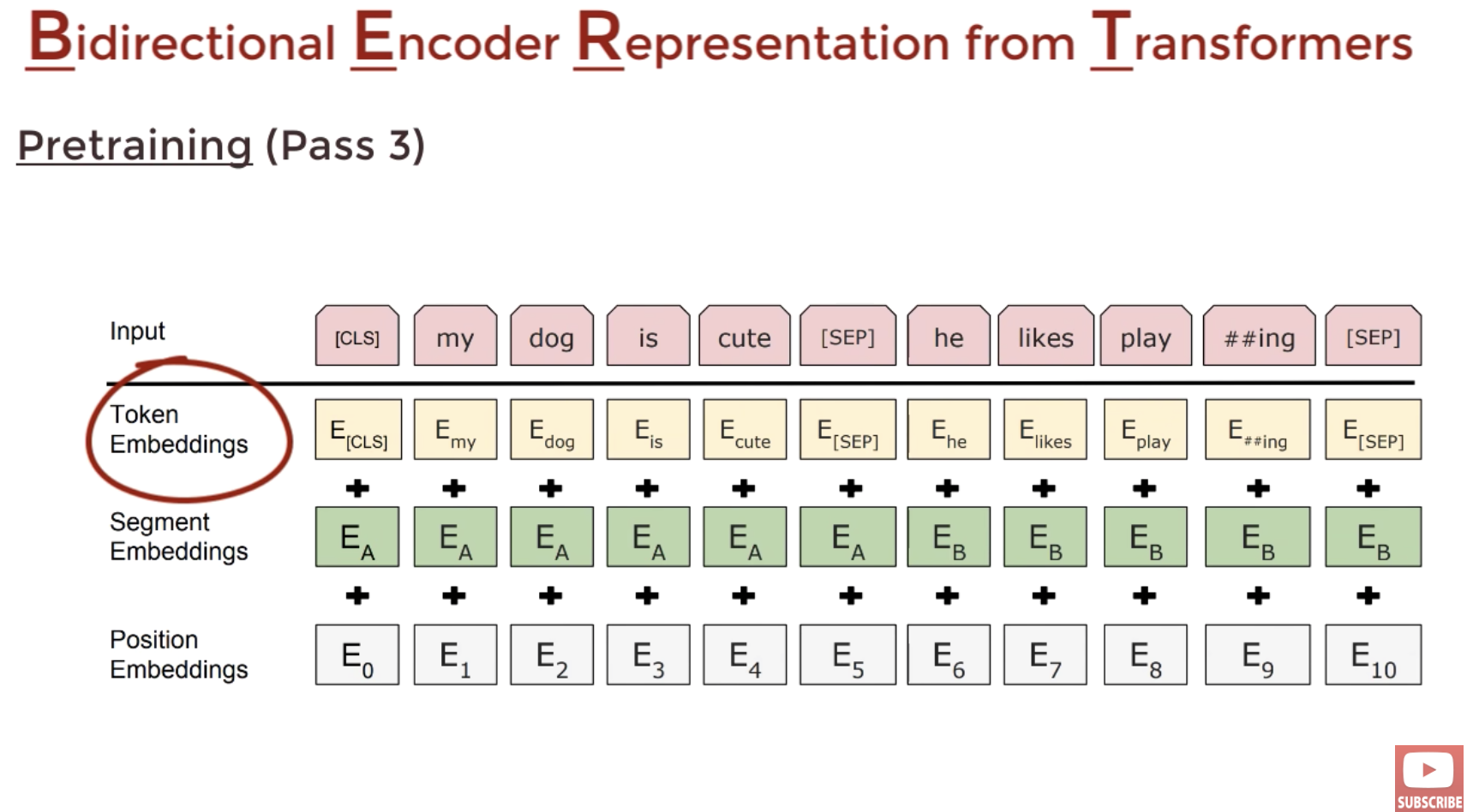

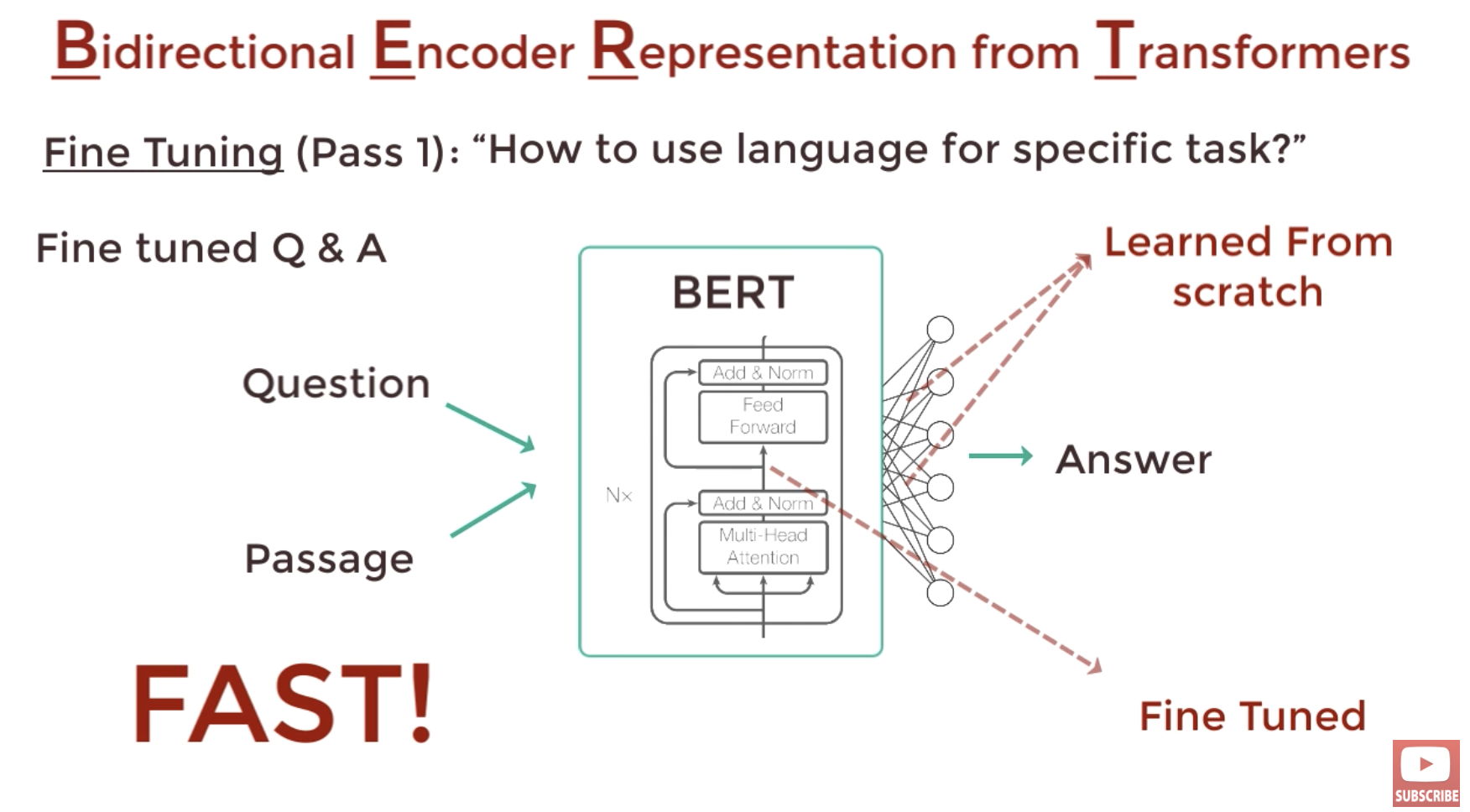

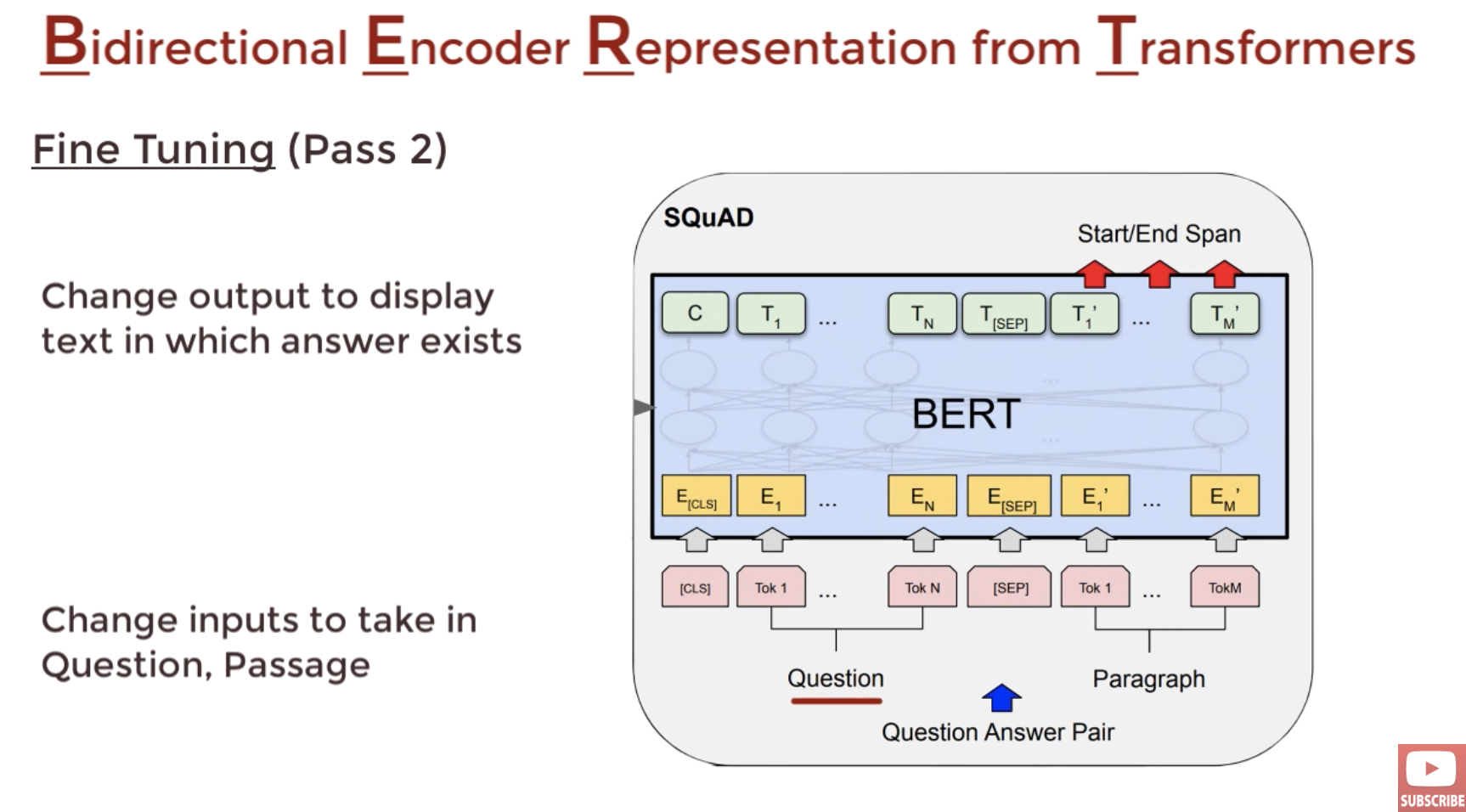

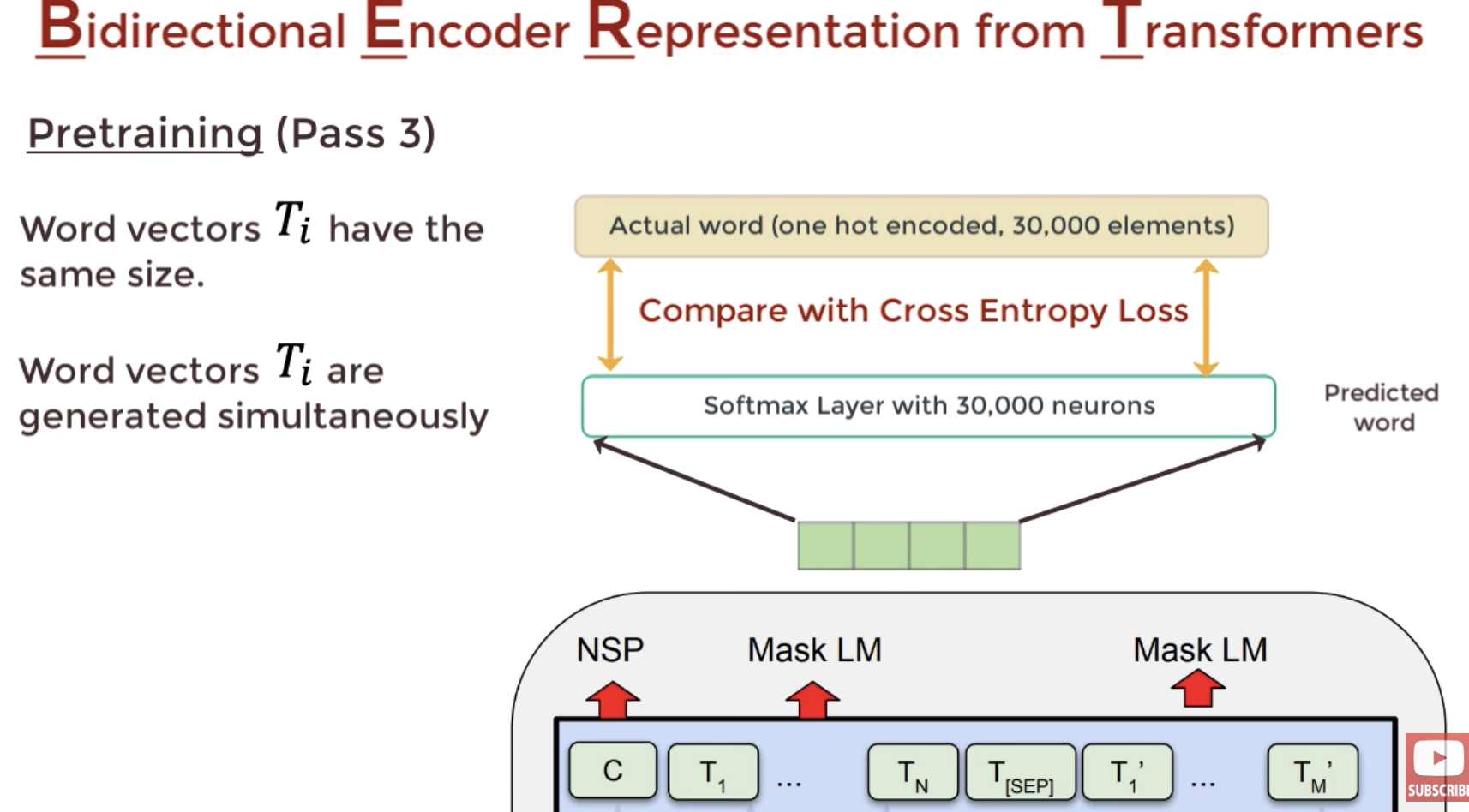

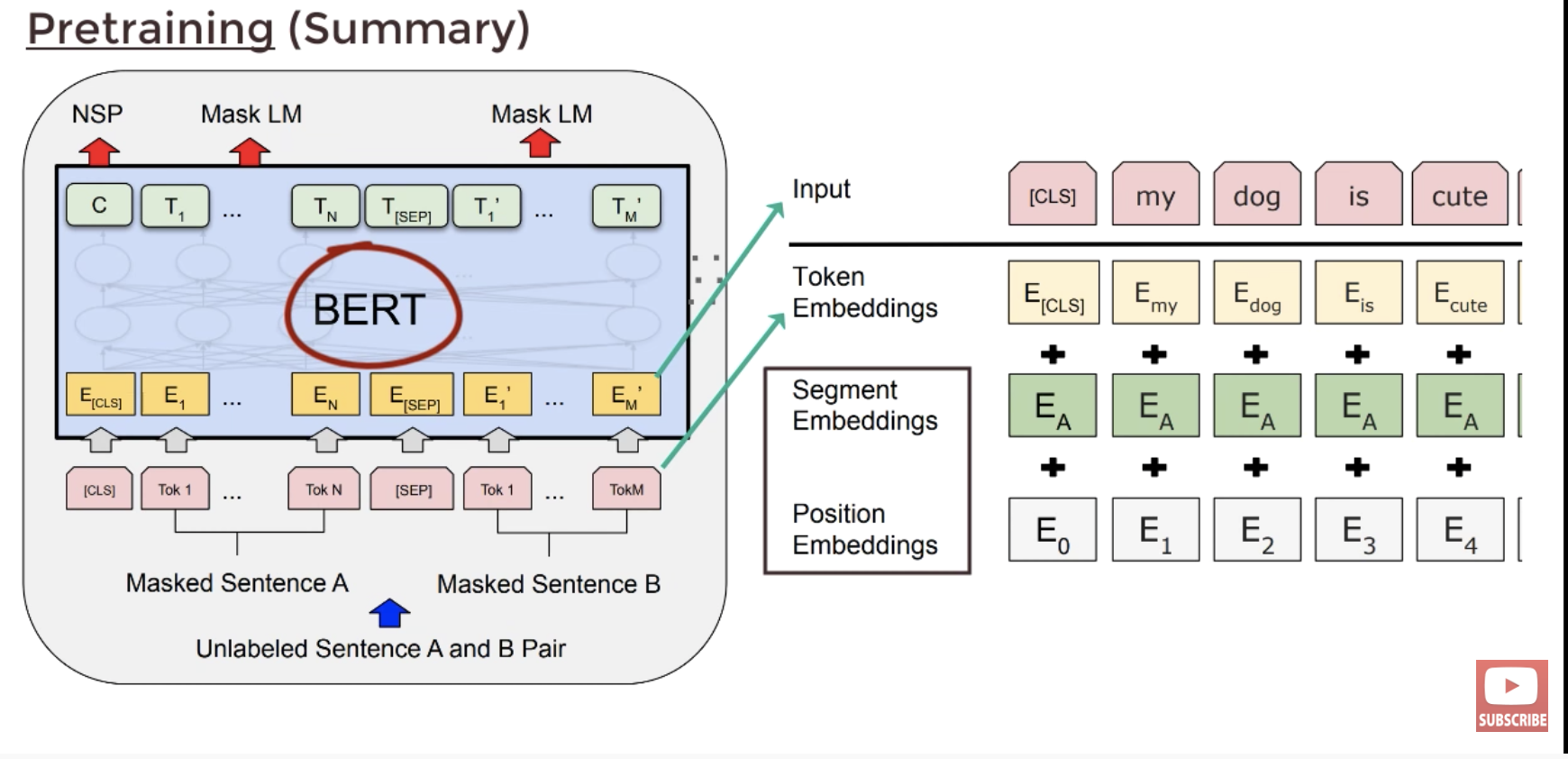

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding